RealMe, Digital Identities, weak law & function creep.

Please forward and share. Yes, this is dry, but just perhaps it's kinda important.

DIGITAL IDENTITY – THE RHETORIC OF TRUST

NEW ZEALAND’S DIGITAL ENVIRONMENT

How do New Zealand’s digital identity system and prospective digital identity ‘Trust Framework’ work? Digital identity systems potentially represent a paradigm shift in power. Much of this is occurring out of sight of the public, and public consultation has, at best, been mediocre.

Digital ID systems and potential legislation - the Digital Identity Services Trust Framework Bill - will interweave with privacy laws. As with any prospective regulatory system, ‘safety and efficacy’ will hang on the potential for regulators to apply investigative or inquisitorial powers, and to be resourced to undertake their work.

After asking a couple of expert academics about RealMe system architecture and data governance oversight, and finding that they didn’t know either, I now presume there is massive public ignorance concerning the sociopolitical, democratic and ethical dilemmas that pervade New Zealand’s digital identity landscape.

This is my take on how RealMe works, on the processes of public consultation in 2019/2020, and then on the consultation on the Digital Identity Services Trust Framework Bill.

I firmly believe that the ‘trust’ rhetoric is not buttressed by a robust policy framework. I believe there is no capacity for foresight, and the legislation will not protect the public from misappropriation of power.

Correct me – if I am incorrect.

THE PRIVACY ACT 2020

New Zealand’s Privacy Act 2020 is the overarching legislation providing the powers for agencies to collect, use, disclose, store and give access to personal information. The Privacy Act is designed to promote and protect privacy by:

‘providing a framework for protecting an individual’s right to privacy of personal information, including the right of an individual to access their personal information, while recognising that other rights and interests may at times also need to be taken into account.’

However, the principles, set down in section 22, help us to understand that privacy concerns the risk to an individual when data is used for a particular purpose. The Privacy Act does not prima facie have regard to the erosion of rights over time, nor the potential for abuse of power by the state.

Privacy Act Part 7 contains rules for the sharing, accessing and matching of personal information. Approved information sharing agreements (AISAs) are agreements between or within agencies that enable the sharing of personal information.

To see just how agency cross-talk is enabled, look at Schedules 2-4 of the Privacy Act, which set out inter-agency information sharing, including which agencies have approved information sharing agreements (AISAs). Schedules 5-6 reveal which legislation permits information matching.

Note that the New Zealand Law Commission states that ‘schedules can also be of equal importance to or even greater importance than material in the body of the Act.’ It’s not difficult to imagine that few limits are placed on executive power to access your information.

The Privacy Commissioner depends on organisations to report through the NotifyUs mechanism to identify the majority of privacy breaches. Yet individuals working inside government face huge social, psychological and economic barriers in making complaints, and whistleblowers’ allegations often follow years of system failure.

I asked the Privacy Commissioner for

The sole requirement concerned an obligation for businesses and organisations to ensure that personal information transferred overseas is adequately protected (Information Privacy Principle (IPP) 12).

The request was otherwise refused as the Privacy Commissioner does

‘not hold the information you have requested to the extent that your request relates to contracts clauses or policies adopted by particular agencies.’

Who is doing this work, then, if AISA legislation comes under the Privacy Act?

RealMe, the New Zealand Government’s Digital Identity Service

The Privacy Act is intended to protect individuals who use government identity verification services such as RealMe.

RealMe was introduced in 2012 before market-based decentralised identity services were common. Over 1 million people have a verified identity on the platform. A RealMe account is required for visitor visas; and across tertiary institutions the RealMe system is promoted as the most convenient option for student enrolment.

RealMe is governed primarily through two Acts. The Identity Information Confirmation Act 2012 allows public and private sector agencies to corroborate citizenship and passport information, as well as Births, Deaths and Marriages registry information. The purpose of the Electronic Identity Verification Act (EIVA) 2012 ‘is to facilitate secure interactions (particularly online interactions) between individuals on the one hand and participating agencies on the other.’ We can see that principles, incorporated at a high level in the EIVA legislation, ensure people have a choice to join and

‘complete discretion to decide whether to apply for an electronic identity credential to be issued to him or her and whether to use it at all if it has been issued.’

While the Act states that an individual’s consent must be secured in order for the service to supply information to another agency, the Act also states that this does not override provisions in other Acts. Therefore, we might guess that the AISAs mentioned above ensure that inter-agency cross-talk is relatively unimpeded.

There are two elements to the RealMe service – a login and a verified identity. A photograph is required in order to have a RealMe identity. As I have noted, the biometric data held by the DIA includes facial images and liveness testing. The liveness test is in the form of a video.

The New Zealand government has replatformed the RealMe login service to a Microsoft cloud-based solution.

‘It is expected that Microsoft will continue investing in the product to support future improvements to user experience and security.’

In 2022 Google announced New Zealand would become a ‘cloud region’.

After logging in through RealMe, the information is then retrieved from the DIA's identity verification service. The DIA's identity verification service privacy statement on the RealMe site is quite specific: it concerns

‘our treatment of your personal information if you apply for a verified identity to be able to verify your identity online’.

Therefore, I understand that when the Electronic Identity Verification Act refers to a service, this specifically means an Electronic Identity Verification Service, which is the second element of the RealMe service: the verified identity information held by the DIA.

Who’s responsible?

The Department of Internal Affairs administers the Electronic Identity Verification Act 2012 and the Identity Information Confirmation Act 2012, while the Ministry of Justice administers the Privacy Act. The Department of Internal Affairs has oversight over a tremendous quantity of information. There is little public oversight concerning backend operations of the DIA's identity verification service.

It’s decoupled from RealMe, which is the public interface, but what happens behind the scenes is black boxed. It’s unclear how information held with the intelligence and law enforcement agencies is aggregated with DIA data. Schedule 2 of the Privacy Act lists current AISAs. The first year of the pandemic coincided with an increase in AISAs.

One assumes that the Electronic Identity Verification Act 2012 sets the standards for the DIA's identity verification service. However, the Act’s purpose seems to focus on facilitating secure interactions between individuals and agencies. This is quite different from AISAs, which are agency to agency. As the Act clarifies in Section 39, individuals who sign up to an identity scheme can’t prevent cross-agency information transfers when government agencies are parties to an AISA.

Identification, or identity proofing,

‘verifies and validates attributes (such as name, birth date, fingerprints or iris scans) that the entity presents.’

An individual may share attributes with a broader digital identity user population. Personal attributes can include data points such as IP addresses, activity history and location. Theoretically the individual has control over what attributes are shared, but inter-agency government agreements override this.

It appears that the Privacy Act does not limit the collection of biometric or identity information, and the principles are weakly worded. The Electronic Identity Verification Act 2012 focusses on secure online interactions, and the Digital Identity Services Trust Framework Bill concerns the governance of service providers such as RealMe as well as the DIA's identity verification service.

This is why separation of powers to review information sharing activity is critical.

Yet the underfunding of regulatory powers is already evident in the resourcing provided to the Privacy Commissioner with regard to oversight and compliance. Compliance and enforcement is a new primary activity of the Privacy Commissioner; these activities were previously lodged under the Information Sharing and Matching output class. With only a NZ$2 million p.a. budget and a team of four dedicated staff engaged in active compliance and enforcement to investigate systemic problems, it’s impossible to expect that the Privacy Commissioner has oversight of what is happening behind departmental doors with regard to private citizen information, across a government sector with a NZ$100 billion budget.

There is no meaningful stewardship scheme beyond a small $2 million budget held with the Privacy Commissioner, and new legislation coming through the door lacks inquisitorial power.

THE DIGITAL IDENTITY SERVICES TRUST FRAMEWORK BILL

Digital identity systems make money. The significant potential economic and social benefits of digital identity are estimated to be worth between 0.5% and 3% of GDP, at least $1.5 billion in NZ. Accreditation is provided through a fee-based model, which is estimated to cost between $10,000 and $250,000 depending on the complexity of the application.

Yet there has been no resourcing in the Bill for inquisitorial or investigative powers to explore the potential for abuse of power by the ‘accredited digital identity service providers’ over time.

In 2021 the New Zealand government acknowledged that the digital ecosystem lacked coherence, and proposed a governance board which would have oversight as a ‘trust framework.’ In a news article, the Digital Economy and Communications Minister David Clark stated that the

‘proposed law will allow individuals to have greater control over their data.’

The word ‘trust’ is repeated as a rhetorical device throughout the documentation, in an effort, I can only assume, to gain public support and therefore legitimation of the overall framework.

The Digital Identity Services Trust Framework Bill was drafted to govern and provide an opt-in accreditation framework for the ‘Trusted Framework’ providers: the public and private institutions that would supply digital identity services.

The trust framework is the legal framework/structure that is intended to regulate the provision of digital identity services for transactions between individuals and organisations. This includes two administering bodies: a board and an authority. The board recommends rules and regulations to the Minister, while the authority acts as the accreditation regime, enforcing the rules recommended by the board.

Policy documents describe parties to the trust framework as users, TF providers and relying parties. The user is an individual who shares personal or organisational information with a relying party, using the accredited service provided by the Trusted Framework (TF) provider. The user may do this on behalf of themselves or on behalf of an organisation.

Once the Trust Framework is in place the government would take steps to accredit RealMe services. It’s problematic that the DIA oversees both RealMe and the governance system that is meant to accredit it.

The board has no investigative powers. The board is not responsible for reviewing the global environment to assess potential short or long term risks, in order to triangulate New Zealand’s rules and operations with global challenges and new knowledges. Under section 93, the authority could suspend or cancel an accreditation due to an act or omission that may pose a risk to

‘the security, privacy, confidentiality, or safety of the information of any trust framework participants or the integrity or reputation of the trust framework’.

However, with no inquisitorial powers and, importantly, no resourcing for the authority to review the global environment in order to identify potential harms, it is unlikely the board’s efforts will be anticipatory.

New Zealand’s primary digital identity lobby group’s membership draws together tech companies, government agencies, management consultancies, including Google and Microsoft. Civil society groups are not in this association, nor are academic institutions researching digital technology and stewardship. A search on Google Scholar for ‘digital, identity, ethics, Zealand, Aotearoa’ results in a dearth of literature. Digital Identity NZ (DINZ) assembled prior to policy development. In 2019 DINZ led anti-money laundering

They conducted research which explored the experiences and opinions of New Zealanders concerning the security of online personal information and digital identities. Then DINZ colonised consultation and decision-making processes. The organisation includes public sector agencies and banks, but not institutions concerned with democracy, ethics and human rights.

a. Prior to the digital TRUST Bill – 2019-2020 consultations

The government has not consulted widely with the public. Rather, the government has constructed a Bill after wide consultation with industry and government.

I requested information from the DIA to understand the degree of public consultation (discussed here) that occurred throughout 2019-2020, i.e. prior to specific consultation on the proposed digital identity legislation.

A one-week-long consultation in 2019 appears to be the most extensive consultation the general public were privy to. A second consultation, in December, was held with Māori, specifically about digital identity.

Five documents fell within the scope of the request.

August 2019. UMR research proposal. Digital Identity Research. 8 focus groups with a final report September 30, 2019. (pp. 1-24/156)

September 2019. Key findings UMR presentation to DIA of the 8 focus groups. (pp. 25-75/156)

December 2019. AATEA Digital Identity Research Report. (pp. 77-85/156)

June 2019. Project Services research. Yabble. (pp. 86-118/156)

DIA: Project Services. (pp. 120-156/156)

UMR research proposal. Digital Identity Research. 8 focus groups

There was distinct awareness about the imbalance of power. As the report noted (28/156):

‘A plethora of reassurances would be required to get any scenario across the line. Applying to all scenarios, participants would like an independent agency that would monitor and enforce rules and for the originator of the data to be responsible for adhering to the rules.’

And on 47/156

‘A number of situations were recounted where people had felt uncomfortable providing information but had felt obliged to. Most were situations where there was an imbalance of power – seeking financial assistance, in the workplace, to access needed services, and when dealing with government services.’

Interestingly, participants were comfortable with portable information ‘related to sharing information between government agencies, and within the health sector.’ However, ASIAs were not discussed.

Many of the findings from these minor consultations have been ignored and whitewashed.

Māori

This is particularly so for Māori. Amazingly, the AATEA consultation with Māori was not included as an item in the Bills Digest. In the Cabinet material the government refers to having consulted Māori, but does not cite the document, link to it, or reflect its concerns around trust and the Crown. It should have been uploaded to the portal.

Both the UMR and the AATEA reports clearly outlined the low level of trust in government. While the Cabinet material notes this, it does not translate into more comprehensive (that is, trustworthy) governance, or into protections to prevent abuse of power.

Instead, ultimately the Cabinet paper pivots to a concern for Te Ao Māori approaches to identity and tikanga processes; not to the acute and endemic issue of trust and the potential for abuse of power by Crown agencies.

The problem here is that Māori, as with Pākehā, are vulnerable to abuse of power from weak regulators. But Māori have felt the back end of the Crown since colonisation. They are experts in this space.

So a focus on Te Ao Māori, without ensuring regulators are authorised with inquisitorial powers, is superficial, disingenuous and misleading.

Effectively the Māori Advisory Group would be toothless at addressing rights abuses. It’s important to note that the role of the Māori Advisory Group is to advise the board on Māori interests and knowledge, and cultural perspectives.

No-one is tasked to look at internal corruption.

b. 2020 Establishing a Trust Framework: Cabinet papers/RIS

June/July 2020 policy papers set out proposals for a future ‘Trust Framework’. They focused on ensuring the future framework would be trusted, coherent and sustainable. Eight governing principles were suggested to guide the development of New Zealand’s Trust Framework:

‘people-centred, inclusive, secure, privacy-enabling, enabling of Te Ao Māori approaches to identity, sustainable, interoperable, and open and transparent. These principles align with the principles in the Data Protection and Use Policy (DPUP), developed by the Social Wellbeing Agency and work will continue to ensure ongoing alignment between the DPUP and the Trust Framework.’

These early policy statements emphasise convenience, trust and usability. Governance and accreditation involve assessment of the provider parties, and the policy doesn’t delve into deeper issues of risk relating to the issues of potential conflicts of interest and abuse of power by providers, including governments.

The June 2020 policy paper stated (p. 33/58):

‘To ascertain the views of these stakeholders, extensive consultation was undertaken both with individuals and over 100 public, private and non-governmental entities. This was achieved through face to face meetings, regular workshops, surveys and focus groups over an 18 month period.’

Digital service providers involved in the consultation included MATTR, SSS online security consultants, Planit software testing, Middleware Solutions, SavvyKiwi, Sphere Identity and Xero. Banks involved included Westpac, ASB, KiwiBank, ANZ, BNZ, Payments NZ and PartPay.

Other relevant public sector groups such as Veracity Labs, who in another world would be considered a ‘stakeholder’, were not invited.

The public were not broadly invited, and most civil society groups had no idea these processes were underway. Neither public meetings, nor investigative journalism, nor academic enquiry have unpicked how the architecture would be guided at high level, nor critically assessed regulatory gaps and the potential for abuse of power, driven not just by financial, but political interests.

In contrast to this extensive consultation, organisations and the public not party to the 18 month consultation were given 26 workdays, from October 27 to December 2, 2021, to submit on the Digital Identity Services Trust Framework Bill.

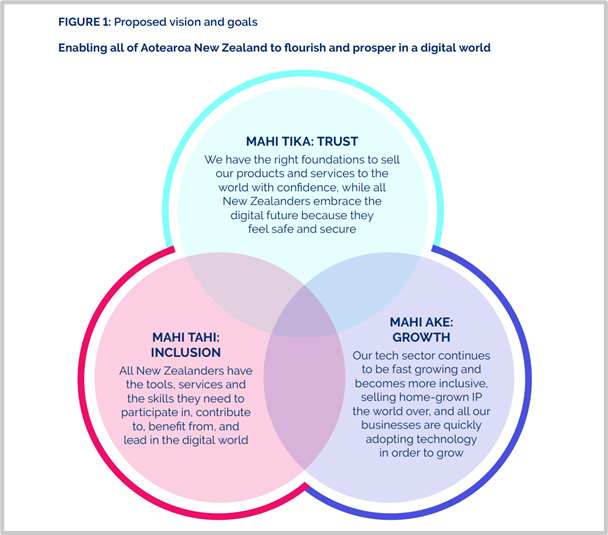

c. October 2021. Towards a Digital Strategy for Aotearoa

I consider that the public were performatively granted a chance to give input, one month prior to consultation on the Digital Identity Services Trust Framework Bill, on a discussion document, Towards a Digital Strategy for Aotearoa.

This is because the infrastructure/framework of the Bill - the key legal document - had already been bedded down.

Submissions on the discussion document, Towards a Digital Strategy for Aotearoa, were open from October 6 to November 10. The Summary of Public Engagement was published in April 2022. We can presume that the drafting of the digital identity Bill was not connected to this consultation.

The Towards a Digital Strategy document did not discuss the role of democracy and digitisation, or the potential risks of largely opaque frameworks, where barriers to democratic norms such as transparency and accountability could easily arise. Instead, trust, inclusion and growth were promoted. While benefits were discussed, risks such as exploitation of private data by multinational providers remained unarticulated.

Groups did submit. Veracity Labs proposed a much broader, visionary stewardship architecture. Their proposals included that New Zealand commit to ‘high-quality standards and protocols for services that are hosted onshore’; a separate governing Ministry; and greater systemic accountability. Veracity Labs recommended that the government:

‘Ensure all decisions and actions taken in government that are subject to Administrative Law are explainable, auditable, and appealable, in real time (or as close as possible thereto), with automatic digital traceability to relevant legal authorities, available to all New Zealanders.’

I was involved in drafting the Physicians and Scientists for Global Responsibility (PSGR) submission. PSGR cautioned that ‘the Document provides little assurance that fundamental rights of the individual would be protected – and prioritised – especially against claims of a “generalised public interest”.’

PSGR drew attention to the rhetorical use of the word ‘trust’ and to the absence of meaningful discussion on how trust should be assured, particularly when weakly understood constitutional protections are balanced against asymmetrical institutional power.

In September 2022 the summary of public submissions to the discussion document - Digital Strategy for Aotearoa was released. Trust, inclusion and growth were retained as the key themes, along with the October theme logo. The Digital Strategy does not discuss ethics, nor stewardship dilemmas, but focusses on positivistic deployment of digital infrastructure, and allied economic growth.

Governments have tended to develop digital identity schemes without constitutional and public oversight. Most legal scholarship has focused on the right to privacy. As a result, the human rights implications of the digital ID industry remain largely unexplored, and all too often, implementing frameworks are weakly worded.

d. November 2021. Digital Identity Services Trust Framework Bill: The Public Submission Process

The consequent narrow scope of the Bill meant that the select committee could claim public submissions were outside its frame of reference. The majority of public submissions were therefore dismissed by the Economic Development, Science and Innovation Committee as out of scope. Yet the Bill lacked high level purposes or principles that promote accountability, guide decision-making and future rule-making, and help navigate opaque digital environments.

Public good submissions which spoke directly to the Bill, and to risks relating to public interest stewardship issues, were not responded to by the committee.

For example, the New Zealand Council for Civil Liberties submitted recommendations that increased public participation, accountability and oversight, including to prevent conflicts of interest.

Institutions such as Veracity Labs, with expertise in the digital landscape, called for the establishment of values-based principles in their submission to the Bill. Veracity Labs acknowledged pervasive asymmetries of power, stating that the ‘digital technologies we depend on are predominantly developed by large, offshore organisations with near-monopolistic control.’ Their recommendations included shaping local tech development away from a ‘“buy it, configure it, use it” mode of digital technology use’, recognising the need for governance frameworks that protect citizen data from offshore exploitation. As they stated, ‘a national, coordinated response is required, before principles such as truth, fairness, and democracy on which our national sovereignty is based are catastrophically eroded.’

PSGR submitted that the Bill’s focus on narrow questions of governance board and accreditation practicalities, rather than on overarching policy or public interest questions, in combination with the exclusion of the public from policy development, meant that the Bill failed to be people centric. PSGR stated that the supporting documentation was inadequate, noting that:

‘Policy papers supporting this DIB and the Bill itself focus on reporting systems, and give no indication that any anticipatory regulation will occur to actively prevent harm. It is not evident that these institutions will have adequate powers of scrutiny, and the regulatory teeth, both to anticipate error and malfeasance, and to monitor and analyse the boundaries of risk and prevent harm before the harm has occurred.’

None of these submissions were heeded in the Select Committee Commentary, nor represented in amendments.

Over 3,000 submissions expressed concern relating to the potential for digital identity systems to be repurposed for political and/or purposes. They were not viewed as relating to the ‘content of this bill’. This is because these submissions alluded to issues of democracy, state power, human rights, and coercion, in relation to digital identity systems which effectively act as passports for a broad spectrum of human life.

The Digital Identity Services Trust Framework Bill Commentary stated that many submissions drew attention to the potential for digital identity systems to be repurposed for use in social credit systems, to be toggled to access to general freedoms (such as drivers licences), and to be toggled to access to permissions for digital currencies.

As the commentary confirms, submissions to the Bill occurred at the same time that the Hon Chris Hipkins had released legislation requiring the public to comply with vaccination in order to access services, events and amenities. On November 23, the COVID-19 Response (Vaccinations) Legislation Bill was introduced; no public consultation was permitted and it received Royal Assent two days later.

While it was understood from 2020 that COVID-19 risk was highly stratified, Hipkins worked to introduce vaccine passports, to acclimatise the population to surveillance through the registering of seemingly benign QR codes at an unprecedented scale.

There is no doubt that the vaccine industry envisages biometrics, biometric ID tracing and smartphone app tracing as key technologies, explaining that

‘Vaccine tracing via biometrics and smartphone technology would be crucial in enabling necessary follow-ups in the case of required booster shots for the coronavirus vaccine’.

Evidence from Israel illustrated, from March 2021, that digital passports could be rolled out quickly. This was why, in consultation on the Bill, the New Zealand public expressed concern that vaccine passports would not only be required for the current pandemic, but could also be rolled out in future. They understood that the processes already observed (using an emergency to introduce novel technology, short-circuiting safety trials, regulatory oversight that relied exclusively on vaccine industry science, and digitalisation and mandates) set grave precedents for future emergencies.

Yet the Economic Development, Science and Innovation Committee dismissed these claims, stating ‘none of these ideas are related to the content of the Bill’. Therefore, it would appear, that 3,600 submissions were out of scope.

This is because nothing in the purpose of the Bill drew attention to broader ethical and public law issues.

The purpose of the Bill established this orientation very precisely:

The purposes of this Act are—

(a) to establish a legal framework for the provision of secure and trusted digital identity services for individuals and organisations:

(b) to establish governance and accreditation functions that are transparent and incorporate te ao Māori approaches to identity.

The Bill’s purposes instead concern technical (structural) practicalities, rather than constitutional or fiduciary considerations that could more broadly encompass protective, guiding stewardship obligations and principles. Such purposes do not, for example, build in an obligation for the board to survey other jurisdictions and identify potential problem areas where there has been institutional corruption and where the public may be at risk of exploitation.

The purposes identified how the legal framework would be structured for the provision of secure and trusted digital identity services, the accreditation processes as this impacted the providers, and the setting up of a governance board. Apart from the obligation to honour the Treaty of Waitangi, the only principles drafted into the Digital Identity Services Trust Framework Bill concern processes for dealing with complaints and offences, down at section 67.

In summary, the Bill lacks overriding stewardship purposes and principles. I believe this amounts to sustained and intentional ethics washing.

Foresight is, apparently, outside scope.

‘TRUST’ RHETORIC NOT BUTTRESSED BY A ROBUST POLICY FRAMEWORK

There is a consistent absence of any meaningful regulatory entity that has the inquisitorial power to explore the potential for the identities of civil society to be abused by political and financial interests, public or non-public.

There is a great deal of social science demonstrating how vested interests benefit when harm and risk remain unarticulated, and regulators lack autonomy. Complex legal, political and ethical risks concerning the potential for the Trust Framework legislation and the associated Privacy Act to act in concert to slowly but inevitably erode rights remain unsaid. In this void, and with patterns of authoritarian measures in democratic nation-states globally, it seems increasingly likely sweeping measures will act to shepherd behaviour and drive compliance, while removing capacity for dissent. Digital identity system framework white papers and policies might refer to human rights, but they do not articulate the upstream rights-based risks. The Privacy Commissioner only has $2 million for compliance and the Trust Framework lacks teeth.

Schemes will involve a myriad of private sector actors having access to data. The technologies, incorporating machine learning and algorithmic tech, are hidden behind commercial-in-confidence agreements. The larger providers, including banks and tech developers, are financially and politically affiliated with big data and the global management consultancies. Most are partially owned by investment management companies with trillion dollar asset portfolios.

It’s no surprise that regulators would lack teeth to look at broader abuse of power, and risk to democratic life. A 2022 white paper, Paving a Digital Road to Hell, pointed out that most of the legal and technological design and implementation have their origins in overlapping global networks of digital ID promoters.

This is not exclusively a New Zealand problem. A recent release from the U.S. White House provides an example of how discourse relating to the digital currency (digital asset) landscape focuses on responsibility as protection from commercial risks (exploitation, fraud and hacking), rather than drawing attention to the potential for central bank digital currencies to be weaponised to manage citizen behaviour.

Human rights have been kept outside policy discourse, and there has been no meaningful engagement with human rights organisations. A statement by New Zealand’s Attorney General, the Hon David Parker, that the Digital Identity Services Trust Framework Bill would not erode human rights, principally concerned how the Bill impacts applicants (corporations supplying the services), and the governance board.

Parker also focussed only on te ao Māori approaches to identity, not the potential for broader systemic corruption. David Parker’s scope was narrow, just like the Bill.

Long-term, systemic risks to human rights remained outside his frame of reference.

FUNCTION CREEP

How might this happen? Biometrics are already embedded in RealMe. Applicants for a RealMe identity must upload a facial image. The loss of citizens’ rights is most likely to occur through accretion: by the adoption of RealMe across government as the ‘convenient’ service provider. Digital IDs are an instrument of access for digital currency. Where that currency takes the form of a universal basic income or stimulus bonus, access could require that certain conditions be fulfilled.

(Note: yes, most currency is digital. Central bank digital currency is promoted by multinational institutions such as the IMF and the WEF. To be clear – sovereign governments already ‘print’ digital money (explainers here, here and here) and such a CBDC mechanism is not required. We ‘just’ need a working democracy that ensures that this (kind of) ‘loophole’ is not solely exploited for billion-dollar emergencies while nutrition, education, agriculture, health and public good science are systematically degraded and deprived of funding. The main risk is inflationary risk.)

Profiling, and the requirement that certain conditions be met for access, might not be imposed initially, but could be swiftly toggled on later.

Appropriate behaviour might include low-carbon travel or dietary choices, fulfilment of vaccination obligations, and steering clear of so-called misinformation or disinformation. Social credit systems are currently operationalised in China, whose influence on the world stage is scaling up.

With no independent media in New Zealand, and with scanty appellate processes for rights abuses, such a process could be rapidly scaled up in a severe economic downturn, following government claims regarding cyber-security risks, or in another pandemic.

Of course, these coercive mechanisms are not currently in place. They are anticipated – as function creep.

The white paper drew attention to the moral jeopardy of function creep, where new or upgraded digital systems can be redesigned for multiple purposes that were unforeseen when the system was first designed. Organisations directing funding to promote identification services, such as the Bill and Melinda Gates Foundation, have extensive interests in the WHO, and in vaccine deployment.

An April 2022 Freedom of Information request (Department of Health and Social Security, C50516) revealed the scope for delivery of a domestic pass for use in voluntary and mandatory settings, on the surface as a prospective pass for high risk venues. While the discussion concerned COVID-19, it is not hard to see that the functionality will be in place for future events:

‘In preparation for this eventuality, we have built the changes to support two levels of domestic passes. The functionality will be toggled off until required. This enables a quick response if/when the Government invokes a mandate. If a citizen is fully vaccinated, medically exempt or has been in a clinical trial, they will be eligible for an ‘all venues’ (mandatory) pass. If a citizen only has natural immunity or negative test results, they will only be eligible for a ‘limited venues’ (voluntary) pass.’

We can already see the Privacy Commissioner contemplating that CBDCs could ‘support the uptake and use of RealMe’. This raises questions concerning why public behaviour would require shepherding via financial incentives, and just how incentivised the broader machinery of government is to ensure that all citizens are signed up to RealMe.

How, for example, AI and machine learning might work on the data inside any identity system is a blind spot. Artificial intelligence is mooted to converge around a handful of ethical principles, namely: transparency and accountability, justice and fairness, non-maleficence, responsibility, and privacy, but what happens when the rubber meets the road? How does this work in our political-legal infrastructure? Right now it is impossible to understand how digital identity, biometric data and our surveillance systems might converge. AI systems can be inaccurate, and/or promote bias, and this has led to wrongful arrest, unjust criminal sentencing, and incorrect patient treatment.

New Zealand civil society is possibly unaware that a tech industry lobby group, the World Economic Forum, is spearheading a multistakeholder, ‘evidence based’ policy project in partnership with the Government of New Zealand. As the WEF white paper Reimagining Regulation for the Age of AI: New Zealand Pilot Project states:

The project aims at co-designing actionable governance frameworks for AI regulation. It is structured around three focus areas: 1) obtaining of a social licence for the use of AI through an inclusive national conversation; 2) the development of in-house understanding of AI to produce well-informed policies; and 3) the effective mitigation of risks associated with AI systems to maximize their benefits.

From what the paper states, these focus areas appear to be guided by frameworks and guidelines developed by the World Economic Forum’s Centre for the Fourth Industrial Revolution – under the Platform for Shaping the Future of Technology Governance: Artificial Intelligence and Machine Learning. But of course, this is outside civil society, and developed offshore by an institution whose partners are ‘global companies developing solutions to the world’s greatest challenges.’

What we observe, once again, is development of policy and processes at arm’s length from civil society. But, as when we contract and outsource to offshore, globally owned management consultancy firms (who also partner with the WEF), we shoot ourselves in the foot. The knowledge gets sucked up into these international organisations, and the partnerships with democratic governments, and the quality of the advice, largely remain out of sight.

OUTSOURCING SOVEREIGNTY

External contracting of services and advice makes already black-boxed digital technology environments even more opaque. From the 1980s onwards, the outsourcing of expertise has increased as governments pivoted to smaller-government, global and market-facing, economic-growth-oriented management cultures.

As economist Mariana Mazzucato has outlined in Mission Economy, three themes underpinned these changes: privatisation, public-private ventures and outsourcing. Now, in her latest book, Mazzucato is more blunt. She reckons it is a big con.

In 2021, New Zealand spent nearly NZ$1 billion on external contractors and consultants (ECCs). (Currently, the DIA is delaying my OIA request for total expenditure on management consultants for delivering on Three Waters.)

Over the same period, central government actions have weakened the power of local governments. Centralisation continues apace in New Zealand, with the state persistently shifting decision-making power away from local communities with scant, or performative, consulting processes. Health, resource management and local control of emissions and exposures have largely been withdrawn from local communities, with increasing centralisation of health care, the Three Waters platform, fluoridation and 5G/4G technology oversight.

Centralisation is controversial as it removes local accountability regarding services that directly impact local health, but for which harm and risk can occur slowly and be difficult to establish. Performative, after-the-fact community consultation has functioned as an effective rubber stamp for Cabinet to claim legitimacy for the sustained transition away from local and regional control. Health care and water services in the current governing climate are now, more than ever, at risk of accelerated privatisation (through outsourcing via contracts – and sub-contracts) for service delivery.

In the health sector, expenditure on ECCs increased from $60 million in 2016 to $160 million in 2019. While ECC expenditure is a small proportion of the overall budget, Akmal et al. (2021) demonstrate that ECC use in health is already plagued by unclear procurement policies and procedures, and by inconsistent and generally inadequate reporting.

Mazzucato maintains that ECCs can’t be guaranteed to be more efficient, citing UK experiences with management consultancy firms. In addition, there can be ethical failures, such as when McKinsey was assigned to fast-track migrant visas in Germany, but the consequent residency status denied migrants key rights. Accountability and transparency can be undermined through contracting and consultancy processes, particularly when it comes to highly complex and technical projects.

‘Use of consultants also raises important questions of accountability, especially when one of their projects goes wrong, and conflicts of interest – for example, when a consultant works simultaneously for a global health client and a client in a sector such as coal, which harms health. Unfortunately, the secrecy surrounding many consultancy contracts makes it hard to answer these questions definitively.

Schemes like PFI [Private Finance Initiative] and outsourcing involve complex contracts. Economic theories of contract and property rights are clear that the more complicated a product is, the greater the likelihood of asymmetries of information, whereby the seller – say a private provider of prison services – has more information than the buyer, the government. This leads to several difficulties for government. Trying to manage asymmetrical contracts and remedy their intrinsic weaknesses piles extra costs on the buyer. The government cannot give up its legal and political responsibilities to provide certain services, notably law and order and defence, so private providers can cut corners because the government will continue to pay for the service, at least until it can find an alternative way of providing for it – a case of ‘moral hazard’.’

More money is going to external contractors in the agency tasked with oversight for New Zealand’s digital architecture and identity systems. All-of-government contract expenses where the DIA is the lead agency increased by 50% in the three years to 2021, from $421 million to $635 million. While the list of locally owned contracted suppliers is impressive, many of them are partially owned by offshore global firms; and/or, while independent legal entities for tax, regulatory and liability purposes, operate as subsidiaries of an offshore parent or holding company. They’re not genuinely local.

With sub-contracting come commercial-in-confidence clauses that directly benefit large monopolistic service providers. As Mazzucato has noted, it’s inordinately difficult to ‘hold private contractors accountable when supply chains are so long.’ For digital technologies, outsourcing expertise in highly technical environments not only reduces the knowledge bank of expertise in the public sector, it leaves public sector management vulnerable to the vagaries of bidding for contracts, as they have little technical expertise to identify when contractors are taking advantage of the asymmetries of knowledge. This can then result in service-contract billing rates for ECCs that outstrip wages paid to public sector staff performing comparable duties in similar disciplines.

‘an endemic weakness of the outsourcing model of procurement, particularly for long term contracts, where contractors are tempted to underbid to increase market share and hope they can increase their margins as the project progresses.’

For Mazzucato:

‘privatisation and outsourcing can remove tasks from people with long experience of doing them (civil servants) and give them to people whose experience may be much less (private companies). This is a matter of policy, not inherent capability… The consequence, however, has been to hollow out the government’s capacity, run down its skills and expertise and demoralise public servants…’

Mazzucato referred to UK Cabinet Office and Treasury minister Lord Agnew’s 2020 comments on ECCs, that they ‘infantilised’ Whitehall and deprived ‘our brightest [civil servants] of opportunities to work on some of the most challenging, fulfilling and crunchy issues’ – effectively stunting public sector knowledge.

With less knowledge, government becomes more conservative, and managerial. In suppressed knowledge environments, discussions and issues of the public good, and ethics as they relate to policy and regulation, are less robust, as the government lacks an informed perspective – intelligence.

What these ECCs also do, through policy proposals but also through negotiated relationship management, is introduce technical service providers into key government roles. This shifts governments closer to the position where corporate relationships cannibalise the place for public input into consultation. The place for a free society.

In the local expertise vacuum, there are no local researchers granted meaningful capacity for long term, funded enquiry – in such a way that might challenge elites in government agencies.

Which is why no-one really ‘gets’ RealMe - and why there’s little scholarly literature on New Zealand’s digital identity political and legal environment. It’s political.

We have theoretical academia, but in modern universities, we have little critical academia that can look across law, ethics and science. This is a highly political environment. In precarious academic environments, the work won’t be done if it might contradict state policy. Jobs are too hard to come by.

Correct me if I am incorrect.

It’s also unclear what other regulatory failures will occur in future. Will international court cases (precedents) be ignored? Will staff working in RealMe or on trust frameworks exit through a revolving door into cushy industry positions because they have critical insight into our inner workings? Will the Human Rights Commissioner continue to be helpless in the face of rights-limiting ‘emergencies’?

The current regulatory chasm appears where the public thought that the Digital Identity Services Trust Framework Bill would step in. But the proposed untrustworthy governance framework is - transparently - not going to achieve this.

Thanks for getting this far, I appreciate it.